On Friday, I attended the Open Net Initiative launch conference at St Anne's College, Oxford. The report launched there offered a systematic study of the extent of Internet filtering in a number of countries. The discovery that filtering is occurring in 25 of the 41 sampled nations has rightly drawn quite a lot of media coverage (for example, BBC online and IHT). If we accept the idea that the Internet has the potential to be a liberalising and democratising force (and that assumption is not as unproblematic as it might first appear, but that's a whole different blog post, so let's just run with it for a bit...), its potential to have real impact - especially on the most undemocratic and repressive regimes in the world - could be offset by filtering and blocking.

The research itself is a really interesting piece of work. Its outcomes are perhaps best represented topographically - as they are on the ONI website. These maps indicate not only the geography of filtering, but also the type of content being censored. However, two important caveats need to be raised about the research as well.

Firstly, it was very much a work in progress (as was emphasised during the course of the day). Only 41 countries were surveyed and there is still plenty of scope for developing comparisons. For example, to what extent do western countries also censor the Internet with regard to sexually explicit material (information on the map relevant to this is not derived from the ONI testing)? Even countries that were surveyed did not always get complete coverage. The Russian survey, for example, focused very heavily on Moscow, though local conditions across the country may be very different.

Secondly, as a data-based study, the work of the Open Net Initiative leads to a whole host of normative questions, which were certainly at the forefront of my mind over the course of the day. In particular, if we leave aside the revolutionary potential of the Internet in authoritarian states, and try to imagine our ideal liberal democratic society, we still have to ask whether there would be a role for censorship in it (a point raised here and here). There are compelling arguments to suggest there might be - after all, we may want to enforce a libel law, prevent crimes of incitement or stop the sexual exploitation of minors. But could we ever trust a government to do it responsibly, or would it inevitably abuse that power? This, of course, is not a new question (Thomas Hobbes had strong views on the dangers of pamphleteers - not surprising given the circumstances of his lifetime), but the Internet reframes it in new ways. The example of the seventeenth century makes another important lesson very clear as well - censorship, or sometimes the lack thereof, is inevitably the product of political and social circumstances; the two topics are inseparable.

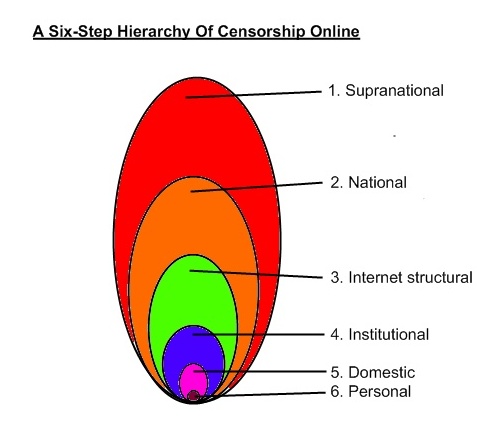

For this reason, I feel it is important to fit the work of the ONI into a wider framework of censorship and politics. The project focused on filtering, that is, the use of technical measures to block access to specific web services. The most famous example of such a strategy is the Great Firewall of China. However, this represents only one type of censorship. Whilst attending a morning seminar with Professor Ron Deibert of the University of Toronto, I (slightly on the fly, I admit, as it had literally occurred to me then) sketched out a six-fold typology of censorship, which it might be worth thinking about in order to develop a multifaceted understanding of what is actually going on.

If we start at the very top level, there are supranational drives to censor. I guess the most notable examples of these come from multinational companies, especially those that are seeking to protect copyright and intellectual property. Additionally, attempts by the EU to regulate and control online activity would fall into this category (here's an example).

The next two levels are really those that concerned the ONI, as they represent the locations where most filtering occurs. Obviously, as the report makes clear, a number of governments block Internet content they regard as distasteful. Additionally though, there is often complicity between governments and what I will term structural elements of the Internet - organisations that either allow individuals to connect online (ISPs) or organise information (most famously, Google in China). What is really interesting about these two levels is the inter-relationship between them. Are the wishes of the government made explicit? Are they regarded as a condition of entry into the marketplace? What degree of autonomy do these structural actors have to choose what is censored and what is not? The particular "solutions" imposed vary from place to place, and that in itself tells us something about local political conditions.

Going to the next level, we can also see that institutional censorship exists. We will all be familiar with this from our office or education environments - some sites are blocked, whilst others are banned, with a threat of punishment and retribution for access. A recent famous example is universities cracking down on students accessing peer-to-peer sites that might be used for illegal downloads (although it should be noted that the universities were themselves acting because they feared legal action from powerful multinationals, which illustrates the possible interactions between the various layers of this typology).

We then hit on the last two levels of censorship, which are both really interesting, but are, I would suggest, really hard to measure as well. Censorship undoubtedly occurs in the domestic environment. Indeed, some ISPs try to sell their products based on the domestic censorship controls that can be set at home. And of course, it is conceivable that in some domestic environments, family members might be banned altogether from Internet access, maybe based on their age or even their gender. This form of censorship would be especially significant if it coincided with society-wide attitudes on the role of women, for example. Finally, we find self-censorship, where individuals decide not to view material based on some notion of what is or isn't deviant according to social norms. It is also possible that self-censorship is brought about by the fear of consequences.

A bit messy, I concede, but I would be interested to hear if anyone has any views as to its viability and whether it is worthy of further thought.